Why Is Content Moderation Necessary? An In-Depth Exploration

Understanding the Importance of Content Moderation

In an age where digital communication and social media are central to our daily lives, content moderation plays a vital role in maintaining safe and productive online spaces. From identifying harmful language to limiting misinformation, it shapes what users encounter online. This section covers the core reasons content moderation is necessary in online environments.

1. Protecting Users from Harmful Content

One of the primary goals of content moderation is to shield users from harmful or offensive content, including hate speech, graphic violence, explicit material, and harassment. By filtering and removing such content, platforms provide a safer experience for all users, particularly vulnerable groups like children and teenagers.

2. Maintaining Brand Reputation

Content moderation is essential for brands and businesses, helping them safeguard their reputation. Companies that operate platforms with user-generated content need moderation to avoid association with negative, offensive, or inflammatory posts that could alienate customers or damage their image.

Balancing Free Speech and User Safety

While content moderation is crucial, it must be balanced with respect for free expression. This section discusses the challenges platforms face in maintaining this balance.

1. The Fine Line Between Moderation and Censorship

Moderating content involves determining what is acceptable without infringing upon free speech rights. Content moderation teams often establish guidelines that aim to curb hate speech or misinformation while respecting diverse viewpoints and cultural sensitivities.

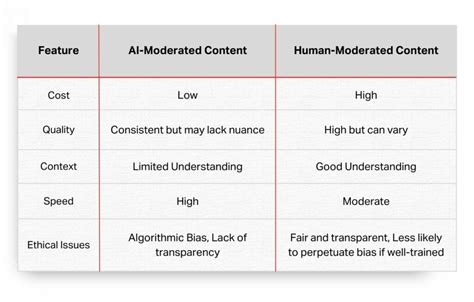

2. Algorithm vs. Human Moderation

There are both automated and manual methods for content moderation, each with strengths and weaknesses. While algorithms offer efficiency and scalability, human moderators provide the nuanced understanding necessary to interpret complex language and cultural contexts.
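One common way to combine the two approaches is to let an automated score decide the clear cases and send uncertain ones to a person. The following is a minimal sketch of that idea, not a production design: `FLAGGED_TERMS`, `toy_score`, `route`, and both thresholds are hypothetical, and the word-matching score merely stands in for a real classifier.

```python
# Hypothetical term list; a real system would use a trained model instead.
FLAGGED_TERMS = {"idiot", "scam"}


def toy_score(text: str) -> float:
    """Return the fraction of words found in FLAGGED_TERMS (placeholder for a model score)."""
    words = [w.strip(".,!?") for w in text.lower().split()]
    if not words:
        return 0.0
    hits = sum(1 for w in words if w in FLAGGED_TERMS)
    return hits / len(words)


def route(text: str, remove_at: float = 0.5, review_at: float = 0.1) -> str:
    """Route a post: clear cases are automated, borderline cases go to a human."""
    score = toy_score(text)
    if score >= remove_at:
        return "remove"
    if score >= review_at:
        return "human_review"
    return "approve"


if __name__ == "__main__":
    for post in ["have a nice day", "this looks like a scam to me", "idiot scam"]:
        print(post, "->", route(post))
```

The thresholds control the trade-off described above: widening the gap between them sends more posts to human reviewers, which costs time but leaves fewer nuanced decisions to the algorithm.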

The Different Types of Content Moderation

Content moderation isn’t a one-size-fits-all approach; platforms use various methods to keep online spaces safe and compliant. This section covers two common forms, pre-moderation and post-moderation. Others include reactive moderation and distributed moderation.

1. Pre-Moderation

Pre-moderation involves screening user content before it is published. While effective in filtering inappropriate content, it may slow down content posting and discourage user engagement due to delays.

2. Post-Moderation

Post-moderation allows content to be published immediately and reviewed afterward. This approach balances user experience and safety: posts appear without delay, though problematic content can remain visible until it is reviewed.
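The difference between the two workflows comes down to when a post becomes visible relative to review. The sketch below illustrates this under simple assumptions: the `Platform` class, its `mode` values, and the `is_acceptable` callback are all hypothetical names chosen for illustration.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Platform:
    """Toy platform that supports pre-moderation ("pre") or post-moderation ("post")."""
    mode: str
    published: list = field(default_factory=list)
    review_queue: deque = field(default_factory=deque)

    def submit(self, post: str) -> None:
        # Post-moderation: the post is visible immediately.
        if self.mode == "post":
            self.published.append(post)
        # In both modes the post still waits for review.
        self.review_queue.append(post)

    def review_next(self, is_acceptable: Callable[[str], bool]) -> None:
        post = self.review_queue.popleft()
        ok = is_acceptable(post)
        if self.mode == "pre" and ok:
            self.published.append(post)  # published only after approval
        elif self.mode == "post" and not ok:
            self.published.remove(post)  # taken down after the fact


if __name__ == "__main__":
    pre = Platform("pre")
    pre.submit("hello")
    print(pre.published)   # nothing is visible before review
    post = Platform("post")
    post.submit("hello")
    print(post.published)  # visible right away
```

In the pre-moderation case the review queue sits between the user and publication, which is where the delay comes from. In the post-moderation case the queue runs alongside publication.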

Ethical Considerations in Content Moderation

Content moderation can involve ethical dilemmas, especially in decisions that affect free speech, censorship, and privacy. In this section, we’ll look at some of the most prominent ethical concerns moderators face today.

1. Privacy Concerns

Moderating content may require access to private information, especially in cases of reported harassment or illegal content. Platforms must take care to respect user privacy and follow data protection laws while enforcing community guidelines.

2. Bias in Moderation

Algorithmic and human biases can sometimes affect content moderation decisions. Platforms must regularly assess and adjust moderation practices to minimize cultural, racial, and political biases that could affect user experience unfairly.
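One simple starting point for such an assessment is to compare how often content from different groups gets flagged. The sketch below assumes moderation decisions are available as `(group, was_flagged)` pairs; the function name `flag_rates` and the group labels are hypothetical. A gap in rates does not prove bias on its own, but it can indicate where a closer look is warranted.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def flag_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Return the share of flagged items per group."""
    totals = defaultdict(int)
    flagged = defaultdict(int)
    for group, was_flagged in decisions:
        totals[group] += 1
        if was_flagged:
            flagged[group] += 1
    return {group: flagged[group] / totals[group] for group in totals}


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    sample = [("A", True), ("A", False), ("B", True), ("B", True)]
    print(flag_rates(sample))
```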

The Role of AI in Content Moderation

AI has become instrumental in content moderation, providing tools that enable real-time detection of harmful content across vast digital platforms. In this section, we’ll explore the benefits and challenges associated with AI in moderation.

1. Scalability and Speed

AI enables rapid analysis and moderation of vast amounts of content, making it possible for platforms to process thousands of posts per second. This scalability is critical for large platforms with millions of active users.

2. Challenges with AI Moderation

Despite its advantages, AI has difficulty understanding context, cultural nuances, and sarcasm. Pairing automated systems with human moderators, who bring the nuanced understanding that algorithms lack, is one way platforms can compensate for these limitations.

Summary Table

| Topic | Description |
|---|---|
| User Protection | Ensures safety from harmful content such as hate speech and harassment. |
| Free Speech Balance | Moderation that respects diverse viewpoints while filtering harmful content. |
| Types of Moderation | Includes pre-moderation, post-moderation, reactive moderation, etc. |
| Ethical Concerns | Addresses privacy, bias, and censorship issues within moderation practices. |
| AI in Moderation | Scalable and fast but faces limitations in understanding context. |

FAQ

What is content moderation?

Content moderation is the process of monitoring and managing user-generated content to ensure it aligns with platform policies and community guidelines.

Why is content moderation necessary?

Content moderation protects users from harmful content, maintains brand reputation, and creates a safe online environment.

How does AI help in content moderation?

AI enables scalable and fast content analysis, allowing platforms to manage high volumes of content efficiently.

What are the types of content moderation?

Types include pre-moderation, post-moderation, reactive moderation, and distributed moderation, each serving different functions.

What challenges do content moderators face?

Moderators face ethical challenges such as balancing free speech against user safety, managing biases, and protecting user privacy.

Can content moderation limit free speech?

It can if applied too broadly. Content moderation must balance user safety and free expression, removing harmful content while avoiding censorship of legitimate viewpoints.

How does content moderation protect brand reputation?

By filtering offensive or inappropriate content, brands avoid negative associations that can damage their reputation.